Introduction

In this lesson, we introduce one of the hottest topics in machine learning: neural networks. Neural networks are models loosely inspired by the human brain. The human brain has about 86 billion neurons connected to one another by synapses. A neural network likewise has many cells (units) that are connected to one another. Whenever an input is given, it is propagated through the whole network of cells until it reaches the output cells, where each output corresponds to a particular result or action.

This lesson will help you build a neural network from scratch. Later, we will introduce TensorFlow, one of the most widely used libraries for neural networks. So let's get started.

How do human brains work?

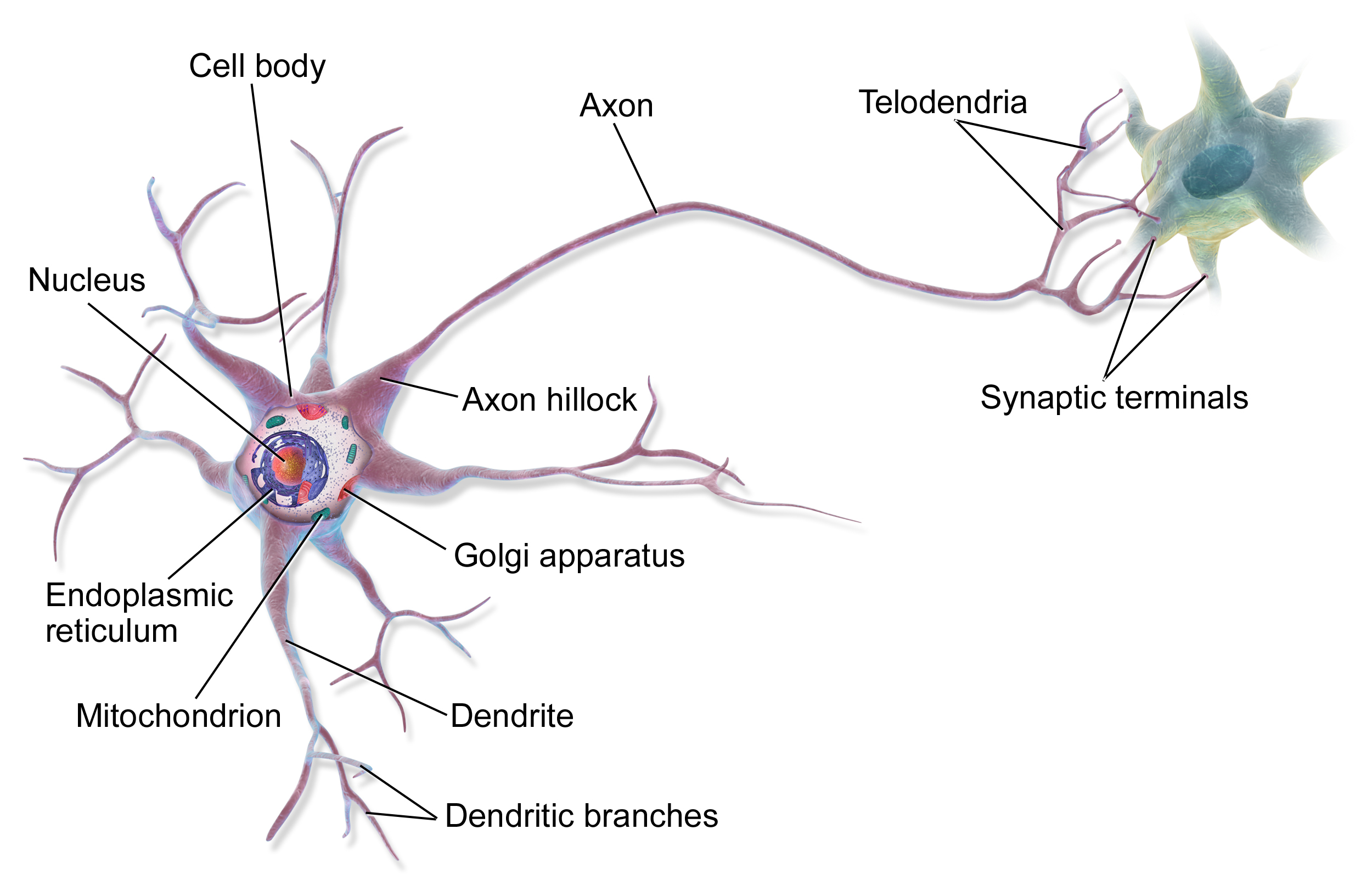

Nerve cells are at the center of everything when it comes to brain functionality. A nerve cell, or neuron, is a special biological cell that processes information. The brain contains a huge number of neurons (about 86 billion, as mentioned in the introduction) with numerous interconnections. A neuron has four basic parts that help it process and transfer information:

- Synapses: A synapse is the connection point between two nerve cells.

- Dendrites: Tree-like branches responsible for receiving information from the other neurons the cell is connected to. In a sense, they are the ears of the neuron.

- Soma: The cell body of the neuron, responsible for processing the information received from the dendrites.

- Axon: The part through which a cell transfers information to another cell (like a wire).

Here is an image showing the connection of two neurons and their different parts.

This is not a biology lesson, right? So why are we learning about brains? Because artificial neural networks are loosely modeled on how the brain works. Here is how the parts of a biological neuron map onto an artificial neural network:

- Synapses are weights in an artificial neural network (ANN).

- Dendrites are the input of a cell in ANN.

- Soma is a node or unit cell in ANN.

- Axon is the output of a cell in ANN.

Artificial Neuron

A neuron is the unit of computation in an ANN. An ANN is essentially a large network of neurons. There are different types of neurons and many types of connections between them. These variations produce different kinds of deep neural networks: some work well for tabular data, others for images, and others for sequential data such as text or speech.

Here is the anatomy of a neuron in ANN.

Let us suppose the input has n features, so the input looks like (X1, X2, X3, ..., Xn). When this input data (more precisely, a tensor) is given to the neural network, each feature is multiplied by a weight. Then all of the products are summed up along with an additional bias value. This sum is the raw output of the neuron. Most of the time, an activation function is then applied to control this sum. We will go through all of these in more detail.

The above can be written as:

$$
z = \sum_{i=1}^{n} w_i X_i + b, \qquad a = f(z)
$$

Here w_i is the weight of feature X_i, b is the bias, f is the activation function, and a is the output of the neuron.
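As a small illustration of the computation just described, the sketch below evaluates one neuron on a three-feature input. The function name `neuron_output` and all the numbers are made up for this example; they are not part of the classes built later in the lesson.

```python
import numpy as np

def neuron_output(x, w, b, activation=None):
    """Weighted sum of the inputs plus a bias, optionally passed through an activation."""
    z = np.dot(w, x) + b          # w1*x1 + w2*x2 + ... + wn*xn + b
    return z if activation is None else activation(z)

x = np.array([1.0, 2.0, 3.0])     # n = 3 input features (illustrative values)
w = np.array([0.5, -1.0, 2.0])    # one weight per feature
b = 0.5                           # bias

z = neuron_output(x, w, b)        # 0.5 - 2.0 + 6.0 + 0.5 = 5.0
print(z)
```

Passing a function as `activation` applies it to the sum, which is the role activation functions play later in the lesson.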

Linear Layer

You might think that we have to loop through all the features and multiply each one by its weight to get the output. But this would be very slow, even for a simple task. Remember, there could be thousands or even millions of features in an input (think of an image of size 4000*2000: it has 8,000,000 pixels). And this is only for one neuron. A modern ANN can contain thousands or even millions of such neurons.

Parallel processing on GPUs and TPUs has made this computation fast and efficient. Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) are built to perform many multiplications and additions at once, so a large matrix operation can run far faster than the same arithmetic done one pair of numbers at a time in a loop. So we need to express the above equation in matrix algebra.

Think of the input as a matrix where each column represents a feature and each row represents a sample.

Each feature has its own weight, and that weight is the same for every sample. So we need to multiply each column by one value. If we take a vector of weights whose length equals the number of features in the input, a single matrix multiplication gives the desired result, including the summation.
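To see that the matrix form gives the same numbers as the loop, here is a minimal sketch. The helper name `weighted_sums_loop` and the array values are illustrative assumptions, not part of the lesson's code.

```python
import numpy as np

def weighted_sums_loop(X, w):
    """Reference implementation: explicit loops over samples and features."""
    out = []
    for sample in X:
        total = 0.0
        for feature, weight in zip(sample, w):
            total += feature * weight
        out.append(total)
    return np.array(out)

# 3 samples (rows) x 2 features (columns)
X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])
w = np.array([10.0, 1.0])         # one weight per feature

loop_result = weighted_sums_loop(X, w)
matrix_result = X @ w             # one matrix-vector product does all the sums

print(loop_result, matrix_result)
```

Both results hold one weighted sum per sample; the matrix version simply hands the whole job to NumPy in one call.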

But what about the bias we talked about?

The bias can also be folded into this matrix multiplication very easily. The bias is prepended to the weight vector as its first element, and a column of ones (like an extra feature) is prepended as the first column of the input matrix.
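The bias trick can be checked with a few lines of NumPy. This is only a sketch with made-up values; the names `X_aug` and `w_aug` are chosen here for illustration.

```python
import numpy as np

X = np.array([[1.0, 2.0],
              [3.0, 4.0]])        # 2 samples x 2 features
w = np.array([10.0, 1.0])         # weights, one per feature
b = 0.5                           # bias

# Explicit form: weighted sum plus bias
explicit = X @ w + b

# Folded form: prepend a column of ones to X and the bias to w
X_aug = np.hstack([np.ones((X.shape[0], 1)), X])   # shape (2, 3)
w_aug = np.concatenate([[b], w])                   # shape (3,)
folded = X_aug @ w_aug

print(explicit, folded)
```

Because every sample is multiplied by 1 in the new column, the first element of `w_aug` is added to every weighted sum, which is exactly what a bias does.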

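We have now understood the most basic building block of deep learning: a layer of many unit cells. This is known as a linear layer. In deep learning, many layers are joined together. Let's see how we can implement it in Python. Note that the implementation stores one row of W per unit and expects X to hold one sample per column, which is the transpose of the layout described above.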

```python
import numpy as np

class LinearLayer():
    # W and b are initialized with small random values (scaled by 0.1)
    def __init__(self, input_size, output_size, activation=None, name=None):
        self.name = name
        self.W = np.random.randn(output_size, input_size) * 0.1
        self.b = np.random.randn(output_size, 1) * 0.1

        # Activation functions are covered in the next section
        self.activation = activation
        if activation is None:
            self.activation = IdentityActivation()

    # This is the prediction of the layer.
    # X has shape (input_size, number_of_samples): one column per sample
    def forward(self, X):
        X = np.asarray(X, dtype=float)
        self.A_prev = X.copy()
        self.Z_curr = np.dot(self.W, X) + self.b
        self.A_curr = self.activation.forward(self.Z_curr)
        return self.A_curr.copy()

# For our test purpose (IdentityActivation is defined in the next section)
if __name__ == '__main__':
    ll1 = LinearLayer(4, 1)
    # One sample with 4 features, stored as a column
    print(ll1.forward([[1], [2], [3], [4]]))
```

Let's test your knowledge. Is this statement true or false?

Matrix multiplication is slower than for-loop multiplication because you also need to keep the order of the matrices.


Activation Function

As we said earlier, we also need activation functions to keep the output data in a desirable shape. Many activation functions are used in deep learning. Let us discuss a few popular ones.

Identity Function

This is the simplest activation function. Sometimes a specific layer does not need an activation function. But to keep all layers consistent, you still need to create and store a dummy activation function for that layer. That activation function is the identity function, which outputs whatever it is given as input.

```python
class IdentityActivation():
    def forward(self, X):
        # Return the input unchanged
        return X
```

Linear

When the output value is proportional to the input value everywhere in the input domain, the function is said to be linear. So a linear activation simply multiplies the input by a constant.

```python
class LinearActivation():
    def __init__(self, a):
        self.a = a  # slope of the line

    def forward(self, X):
        return self.a * X
```

Sigmoid

Now the activation functions start to get interesting. The main objective of a sigmoid is to keep the output between 0 and 1. The equation of this function is:

$$
f(x) = \frac{1}{1 + e^{-x}}
$$

```python
import numpy as np

class SigmoidActivation():
    def forward(self, X):
        return 1 / (1 + np.exp(-X))
```

Tanh

This activation function is very similar to sigmoid. The main difference is that its output lies between -1 and 1 instead of between 0 and 1. It is computed with the hyperbolic tangent (tanh) function.
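The similarity can be made precise: tanh is a rescaled and shifted sigmoid, tanh(x) = 2 * sigmoid(2x) - 1. The short check below is a sketch added for illustration; the helper `sigmoid` is defined locally and is not the lesson's class.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

x = np.linspace(-5.0, 5.0, 11)
via_sigmoid = 2.0 * sigmoid(2.0 * x) - 1.0   # rescaled, shifted sigmoid

print(np.allclose(np.tanh(x), via_sigmoid))
```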

```python
import numpy as np

class TanhActivation():
    def forward(self, X):
        return np.tanh(X)
```

Binary Step

To make sure that the output is binary, a binary step activation function is used. It is mostly used for binary classification. When the input is at or below a threshold (0 by default), the function outputs 0; when the input is above it, the function outputs 1.

```python
import numpy as np

class BinaryStepActivation():
    def __init__(self, thresh=0):
        self.thresh = thresh

    def forward(self, X):
        # 1 where the input is above the threshold, 0 elsewhere
        out = np.ones_like(X)
        out[X <= self.thresh] = 0
        return out
```

ReLU

The Rectified Linear Unit (ReLU) activation function has become popular because it works very well with image datasets. It sets all negative values to 0 and acts as a linear activation function for all positive values.

```python
class ReLUActivation():
    def __init__(self, a=1):
        self.a = a  # slope for positive inputs

    def forward(self, X):
        out = X.copy()
        out[X <= 0] = 0
        return out * self.a
```

Leaky ReLU

Leaky ReLU is similar to ReLU, but when X is negative it applies a second, smaller slope instead of outputting 0.

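```python
class LeakyReLUActivation():
    def __init__(self, a, b):
        self.a = a  # slope for positive inputs
        self.b = b  # (smaller) slope for non-positive inputs

    def forward(self, X):
        out = X.astype(float)  # float copy, so fractional slopes are kept
        out[X <= 0] *= self.b
        out[X > 0] *= self.a
        return out
```

SoftMax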

SoftMax is a very different kind of activation function, mostly used for multiclass classification. It takes all the outputs and converts them to probabilities. The outputs of the softmax function always add up to 1, and every output value is non-negative.

Because softmax takes a whole vector as input and each output depends on every input value, it is difficult to plot as a simple 2D curve.
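To make these properties concrete, here is a small standalone sketch. It is not the lesson's class: the function name `softmax` and the scores are illustrative, and subtracting the maximum before exponentiating is a common stability trick assumed here, which does not change the result.

```python
import numpy as np

def softmax(z):
    """Softmax over a 1-D score vector; subtracting the max avoids overflow."""
    shifted = z - np.max(z)
    exps = np.exp(shifted)
    return exps / np.sum(exps)

scores = np.array([2.0, 1.0, 0.1])   # illustrative raw outputs for 3 classes
probs = softmax(scores)

print(probs, probs.sum())
```

The largest score receives the largest probability, and changing any single score changes every output, because all of them share the same denominator.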

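```python
import numpy as np

class SoftMaxActivation():
    def forward(self, X):
        # Normalize each column (one sample) so that it sums to 1
        exps = np.exp(X)
        return exps / np.sum(exps, axis=0, keepdims=True)
```

Try this exercise. Click the correct answer from the options.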

Which activation function's output is limited to between 0 and 1?


- tanh

- sigmoid

- ReLU

- Leaky ReLU

Build your intuition. Click the correct answer from the options.

Which of these activation functions has a range from negative to positive infinity?


- tanh

- sigmoid

- ReLU

- Leaky ReLU

Neural Network with Feed Forward

So far we have created linear layers and activation functions with forward methods. Our final step is to create a NeuralNet class that contains everything it needs and provides an API to the user.

We will follow the scikit-learn API style for this, so there will be a predict method and a fit method (let's not worry about the other utility methods for now). As we have just implemented the forward method for every class, let's build the predict method from them.

```python
class NeuralNet():
    def __init__(self, layers=None):
        # Avoid a shared mutable default argument
        self.layers = layers if layers is not None else []

    def predict(self, X):
        # Pass the input through every layer in order
        A = X
        for layer in self.layers:
            A = layer.forward(A)
        return A
```

This looks easy, right? Now we will implement a slightly harder part of the NeuralNet: backward propagation.

Loss function

While training our neural network, we need to calculate the loss from the network's predictions and the target labels. We can use a simple function to implement the categorical cross-entropy loss.
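For a single sample, categorical cross-entropy is the negative sum of target * log(prediction) over the classes. With a one-hot target, only the term for the true class survives. The worked example below is a sketch with made-up probabilities; the name `cross_entropy` is local to this example.

```python
import numpy as np

def cross_entropy(target, probs, eps=1e-7):
    """Categorical cross-entropy for one sample: -sum(target * log(probs))."""
    probs = np.clip(probs, eps, 1.0 - eps)   # avoid log(0)
    return -np.sum(target * np.log(probs))

target = np.array([0.0, 1.0, 0.0])           # one-hot: the true class is index 1
confident = np.array([0.05, 0.90, 0.05])     # most probability on the true class
unsure = np.array([0.40, 0.20, 0.40])        # little probability on the true class

print(cross_entropy(target, confident))      # equals -log(0.90)
print(cross_entropy(target, unsure))         # equals -log(0.20)
```

The loss is small when the network puts high probability on the true class and grows as that probability shrinks.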

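```python
import numpy as np

def categorical_crossentropy(target, output):
    # Normalize so that the predicted probabilities sum to 1
    # (classes are expected along the last axis)
    output = output / output.sum(axis=-1, keepdims=True)
    # Clip so that log(0) never occurs
    output = np.clip(output, 1e-7, 1 - 1e-7)
    return np.sum(target * -np.log(output), axis=-1, keepdims=False)
```

Backward Propagation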

We already learned about the gradient descent algorithm in lesson 4. Here we revisit it and apply it to neural networks through backpropagation. We already have the NeuralNet class along with the layers. All we need to do is add a new method called backward to each of them so that backward propagation through the network becomes possible.

What does the backward method do in each case? It receives a gradient from the layer that follows it. This input is not X; it is the gradient of the loss with respect to the layer's output. From it, the method computes the gradient of the loss with respect to the layer's own W and b, and returns the gradient with respect to the layer's input so that the previous layer can continue the process.

Remember, backward propagation and gradient descent are different algorithms. Backward propagation is used only to get the gradient of the loss with respect to W. Gradient descent is responsible for updating the weights using that gradient.
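To keep the two roles apart, here is a sketch with a single weight. The `gradient` function plays the part of backpropagation (it computes the gradient with the chain rule), and the loop plays the part of gradient descent (it uses that gradient to update the weight). The data, learning rate, and function names are illustrative assumptions.

```python
# One weight, one training example, squared-error loss: L(w) = (w*x - y)**2
x, y = 2.0, 8.0        # illustrative data; the loss is zero at w = 4
w = 0.0                # initial weight
lr = 0.05              # learning rate

def loss(w):
    return (w * x - y) ** 2

def gradient(w):
    # Backpropagation step: chain rule, dL/dw = dL/dpred * dpred/dw
    pred = w * x
    dL_dpred = 2.0 * (pred - y)
    dpred_dw = x
    return dL_dpred * dpred_dw

initial_loss = loss(w)
for _ in range(50):
    w -= lr * gradient(w)   # Gradient descent step: move against the gradient

final_loss = loss(w)
print(w, final_loss)
```

A real network repeats the same idea layer by layer: each backward method applies one link of the chain rule, and the update line is applied to every W and b.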

You will understand this better if you take a look at the following equations. Nerd Alert! Calculus Ahead.

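$$
\begin{aligned}
dZ^{[l]} &= dA^{[l]} \odot g'^{[l]}\left(Z^{[l]}\right) \\
dW^{[l]} &= \frac{1}{m}\, dZ^{[l]} \left(A^{[l-1]}\right)^{T} \\
db^{[l]} &= \frac{1}{m} \sum_{i=1}^{m} dZ^{[l](i)} \\
dA^{[l-1]} &= \left(W^{[l]}\right)^{T} dZ^{[l]}
\end{aligned}
$$

Here l is the index of the layer, m is the number of samples, g is the activation function of the layer, and \odot is element-wise multiplication. In the code, dA^{[l]} is dA_curr, A^{[l-1]} is A_prev, and dA^{[l-1]} is dA_prev.

After calculating the gradients of all the layers and activation functions using the above equations, we can update the weights via:

$$
W^{[l]} \leftarrow W^{[l]} - \alpha\, dW^{[l]}, \qquad b^{[l]} \leftarrow b^{[l]} - \alpha\, db^{[l]}
$$

where \alpha is the learning rate (lr in the code).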

Let us implement this in Python. We implement it only for the LinearLayer class and the sigmoid and ReLU activation functions. The derivatives of the other activation functions can be added in the same way.
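As one example of another derivative, the training example at the end of this lesson uses SoftMaxActivation in its output layer, so that class would need a backward method too. The following is one possible sketch, not part of the original lesson. It assumes the `backward(dA, Z)` call shape that LinearLayer uses and one sample per column.

```python
import numpy as np

class SoftMaxActivation():
    # Hypothetical completion: the lesson's class only defines forward
    def forward(self, Z):
        # Column-wise softmax; each column is one sample
        exps = np.exp(Z - Z.max(axis=0, keepdims=True))
        return exps / exps.sum(axis=0, keepdims=True)

    def backward(self, dA, Z):
        # Jacobian-vector product of softmax, applied column by column:
        # dZ = S * (dA - sum(dA * S))
        S = self.forward(Z)
        return S * (dA - np.sum(dA * S, axis=0, keepdims=True))

# Check against the known result for cross-entropy: dZ = S - Y
Z = np.array([[1.0, 0.5],
              [2.0, 0.1],
              [0.5, 3.0]])            # 3 classes x 2 samples (illustrative)
Y = np.array([[0.0, 1.0],
              [1.0, 0.0],
              [0.0, 0.0]])            # one-hot targets, same layout
act = SoftMaxActivation()
S = act.forward(Z)
dA = -Y / S                           # gradient of cross-entropy w.r.t. S
dZ = act.backward(dA, Z)
print(np.allclose(dZ, S - Y))
```

The check at the end relies on a standard result: combining softmax with categorical cross-entropy gives the simple gradient S - Y with respect to Z.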

```python
import numpy as np

class SigmoidActivation():
    # ...
    def backward(self, dA, Z):
        # Chain rule: incoming gradient times sigmoid'(Z) = s * (1 - s)
        s = 1 / (1 + np.exp(-Z))
        return dA * s * (1 - s)

class ReLUActivation():
    # ...
    def backward(self, dA, Z):
        # The derivative is a for positive inputs and 0 elsewhere
        return dA * self.a * (Z > 0)

class LinearLayer():
    # ...
    def backward(self, dA_curr, lr=0.001):
        m = self.A_prev.shape[1]  # number of samples

        dZ_curr = self.activation.backward(dA_curr, self.Z_curr)
        dW_curr = np.dot(dZ_curr, self.A_prev.T) / m
        db_curr = np.sum(dZ_curr, axis=1, keepdims=True) / m
        dA_prev = np.dot(self.W.T, dZ_curr)

        # Gradient descent update
        self.W -= lr * dW_curr
        self.b -= lr * db_curr

        return dA_prev
```

Now that we have a backward method for these classes, we can implement the fit method in our NeuralNet class.

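```python
import numpy as np

class NeuralNet():
    # ...
    def fit(self, X, y, lr=0.001, epoch=1):
        # y holds one-hot targets with the same shape as the network output
        for i in range(epoch):
            # Forward pass (keep X untouched so every epoch starts from the data)
            A = X
            for layer in self.layers:
                A = layer.forward(A)

            # Gradient of the categorical cross-entropy with respect to the output
            dA_curr = -(y / np.clip(A, 1e-7, 1.0))

            # Backward pass: every layer updates itself and returns
            # the gradient for the layer before it
            for layer in reversed(self.layers):
                dA_curr = layer.backward(dA_curr, lr=lr)
```

Now the only work left is to instantiate and train the model. We will train it on the iris dataset we discussed in the Scikit-learn chapter.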

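```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score  # To calculate accuracy
from sklearn.model_selection import train_test_split

iris = load_iris()

net = NeuralNet([
    LinearLayer(4, 10, activation=ReLUActivation()),
    LinearLayer(10, 5, activation=ReLUActivation()),
    LinearLayer(5, 3, activation=SoftMaxActivation()),
])

# We do preprocessing like the last lesson
X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.25, random_state=42)

# The layers expect one column per sample, and fit expects one-hot targets.
# Training also requires every activation used here to provide backward().
Y_train = np.eye(3)[y_train].T

net.fit(X_train.T, Y_train)
pred = net.predict(X_test.T).argmax(axis=0)  # most probable class per sample
print(accuracy_score(y_test, pred))
```

Conclusion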

Congratulations! You can now create a complete neural network architecture. The network supports several activation functions, accepts 2-dimensional input data of any shape, and can be as deep or as wide as you need.

All of this code and the mathematics behind it can feel tough. In practice, you rarely have to write any of it yourself. In the next lesson, we will learn TensorFlow. Then you will see how easy it is to create and train a deep learning model in as few as 10 lines of code.

One Pager Cheat Sheet

- Neural networks are models, designed based on our brains, that propagate inputs through many cells/units to produce outputs.

- Nerve cells process and transfer information through synapses, dendrites, the soma and the axon, and Artificial Neural Networks (ANNs) mimic these parts with weights, inputs, nodes and outputs.

- A neuron in an ANN computes an output (activation) by multiplying the inputs (a tensor) by weights, adding a bias, and then often applying an activation function to the sum.

- A linear layer implements a whole layer of neurons with matrix multiplication, which makes parallel processing on GPUs and TPUs possible.

- Matrix multiplication is more efficient than for-loops because its many independent multiplications and additions can be carried out in parallel.

- An activation function is used to keep the output data in a desired shape.

- The IdentityActivation function returns the given input X as its output.

- A linear function multiplies the input X by a constant and produces an output proportional to the input over the whole input domain: f(x) = a*x.

- The sigmoid activation function takes in a value and produces an output between 0 and 1, following the equation f(x) = 1/(1+e^-x).

- The tanh activation function ranges from -1 to 1 and is calculated using the tanh() function.

- The binary step activation function is a threshold-based activation used for binary classification, which outputs 0 if the input is negative and 1 if it is positive.

- The ReLU activation function sets negative values to 0 and acts as a linear function for all positive values.

- Leaky ReLU is defined by f(x) = b*x when x <= 0 and f(x) = a*x when x > 0, with b < a, and is implemented in Python by the LeakyReLUActivation class.

- The SoftMax activation function converts a vector of outputs to probabilities and is mostly used for multiclass classification.

- The sigmoid activation function provides outputs between 0 and 1, while SoftMax converts all outputs to probabilities that total 1.

- Because Leaky ReLU keeps a non-zero slope on both sides of 0, its range runs from negative to positive infinity, in contrast to activation functions such as sigmoid.

- We have created a NeuralNet class which provides an API for the user to predict using the forward methods of the other classes.

- We can calculate the loss of our neural network with the categorical_crossentropy function, which implements the categorical cross-entropy equation.

- We implement backpropagation by adding a backward() method to each class: backpropagation computes the gradients of the loss with respect to W and b, and gradient descent uses them to update the weights and biases.

- The network built here accepts 2-dimensional data of any shape, and with a few lines of code in TensorFlow you can create and train a similar deep learning model.